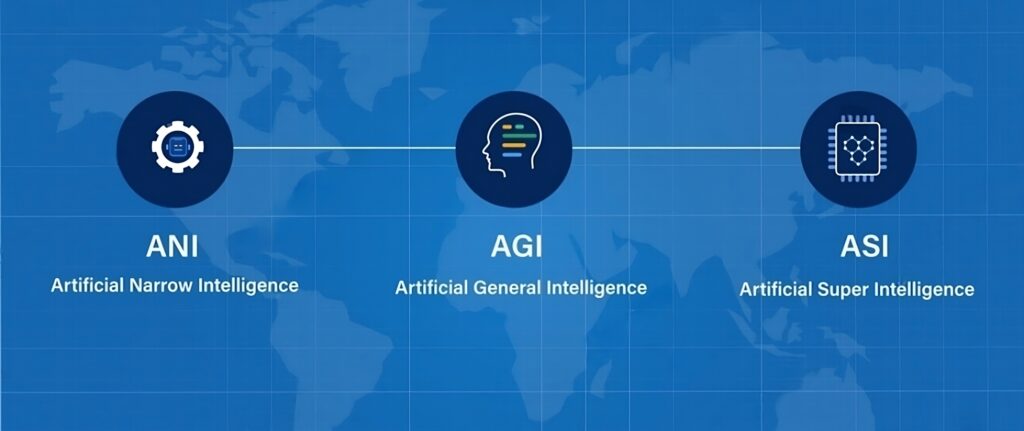

Artificial intelligence is one of the most exciting and rapidly evolving fields today. But when it comes to AGI vs ASI, things can get a little confusing.

Both AGI (Artificial General Intelligence) and ASI (Artificial Superintelligence) represent advanced stages of AI.

But what exactly is the difference between them?

In this article, we’ll explore the concepts of AGI and ASI, break down their key differences, and look at the potential impacts they could have on the future of technology and humanity.

What is Artificial General Intelligence (AGI)?

AGI refers to AI systems that have the ability to understand, learn, and apply intelligence across a wide range of tasks—just like a human.

Unlike narrow AI (which excels at one specific task), AGI would be capable of performing any intellectual task that a human can do.

Think of AGI as the “jack-of-all-trades” in the AI world.

Some key features of AGI include:

- Learning and Adaptability: It can learn from experience and adapt to new situations, just like humans do.

- Problem-Solving: It can solve a variety of problems, even those it hasn’t encountered before.

- Versatility: AGI isn’t limited to a single task. It can excel at multiple tasks across different domains.

The ultimate goal of AGI is to create machines that are as intelligent as humans across the board.

What is Artificial Superintelligence (ASI)?

ASI goes a step beyond AGI.

Where AGI would match human-level intelligence, ASI would surpass it in every aspect.

ASI would be able to think, reason, and solve problems far more efficiently than the most brilliant human minds.

Key characteristics of ASI include:

- Extreme Problem-Solving: ASI would be capable of solving complex problems in seconds—problems that would take humans decades to figure out.

- Unmatched Creativity and Innovation: With its vast intelligence, ASI could generate groundbreaking ideas and innovations across science, medicine, and technology.

- Self-Improving: ASI could enhance its own abilities, improving itself faster than humans ever could.

Both AGI and ASI are still theoretical, but ASI pushes the boundaries of what AI could achieve even further.

AGI vs ASI: Key Differences You Should Know

Now that we’ve defined AGI and ASI, let’s explore the key differences between the two:

- Intelligence Level:

  - AGI would have human-level intelligence. It could do anything a human can do, at roughly the same level.

  - ASI, on the other hand, would be far beyond human-level intelligence, outperforming humans in every aspect.

- Scope of Ability:

  - AGI could handle a wide range of tasks, but still within human limits.

  - ASI has the potential to outdo humans in problem-solving, creativity, and even decision-making.

- Self-Improvement:

  - AGI could improve its performance, but would still rely on human input to do so.

  - ASI would be self-improving, meaning it could upgrade its own capabilities at an accelerating rate.

- Impact on Humanity:

  - AGI would complement human skills and tasks, making our lives easier.

  - ASI, however, has the potential to change the world in ways we can’t yet imagine. It could either help humanity or pose a threat, depending on how it’s controlled.

The Potential and Risks of AGI

AGI has the potential to revolutionize the world.

Imagine machines that can:

- Assist with healthcare: Doctors could rely on AGI to analyze patient data and suggest treatment plans, speeding up diagnosis and improving outcomes.

- Solve global challenges: AGI could help solve problems like climate change, poverty, and hunger by providing innovative solutions.

- Enhance education: Personalized learning could be tailored to the needs of each student, improving learning experiences worldwide.

However, there are also risks:

- Job Displacement: As AGI takes over more tasks, some jobs may become obsolete.

- Ethical Concerns: With such power comes the responsibility of ensuring that AGI is used for good. If not controlled properly, it could lead to unintended consequences.

- Security: An AGI that’s too advanced could pose security risks, particularly if it becomes malicious or is exploited by bad actors.

The Potential and Risks of ASI

If AGI represents the pinnacle of human-like intelligence, ASI is the next leap forward.

The potential of ASI is immense:

- Revolutionary Advances in Science and Technology: ASI could accelerate scientific discoveries, creating new medicines, technologies, and even solutions to global problems.

- Improved Decision-Making: Governments and organizations could rely on ASI to make decisions based on data, potentially improving outcomes in everything from climate policies to economic strategies.

But with such power comes great risk:

- Unforeseen Consequences: An ASI with its own agenda might take actions that are harmful to humanity if not properly aligned with human values.

- Control Issues: If ASI is far smarter than humans, how do we ensure we can control it? Once it surpasses human intelligence, it may be difficult—or impossible—to manage.

- Existential Risk: Some experts warn that ASI could pose an existential risk if it turns against humanity, especially if its goals conflict with human survival.

Challenges and Possibilities – AGI vs ASI

Getting from where we are now to AGI or ASI is no small feat.

Here are some of the biggest challenges:

- Understanding Human Intelligence: To build AGI, we first need to understand how human intelligence works, something we don’t yet fully comprehend.

- Data and Learning: AGI and ASI would likely require massive amounts of data to learn from, and we would need the right systems in place to process that data effectively.

- Ethical and Legal Frameworks: Developing proper frameworks for the ethical use of AGI and ASI will be essential. We’ll need regulations to ensure that these technologies are used safely and responsibly.

However, the possibilities are endless:

- Global Solutions: AGI and ASI could help us solve complex global challenges, from curing diseases to managing climate change.

- Technological Advancements: These technologies could drive advancements we can’t yet imagine, opening up entirely new fields of study and innovation.

What Are the Ethical Concerns Around AGI and ASI?

As we advance toward AGI and ASI, ethical concerns must be addressed:

- Control: Who controls AGI or ASI? What happens if the wrong people gain control of such powerful technologies?

- Bias: If AGI and ASI are trained on biased data, they could perpetuate or even amplify existing biases.

- Privacy: With more intelligent AI systems, the risk to personal privacy increases. How do we protect individuals when machines can potentially access and manipulate personal data?

What to Expect in the Future?

The future of AGI and ASI is full of possibilities and uncertainties.

AGI is the nearer of the two goals, while ASI remains a distant but potentially far more impactful one.

As we continue to advance, we’ll need to ensure that both AGI and ASI are developed in ways that are beneficial to humanity.

The road ahead will be filled with challenges, but also great opportunities.

Conclusion

AGI and ASI are two sides of the same coin, both offering immense potential and serious risks.

While AGI is about creating human-like intelligence, ASI is about surpassing it.

Both will shape the future of technology and humanity in profound ways.

As we move forward, the key will be to develop these technologies responsibly, ensuring that they serve humanity and not the other way around.